Databricks Photon: A Practical Guide to What Works and What Doesn't

Hey there! 👋 In mid-2024, I decided to take on an interesting challenge: enabling Databricks Photon across all our jobs to see what would happen. Today, I'm sharing these insights because I believe they could help others who are considering using Photon in the Databricks environment.

Why I Tested Photon

I was curious about Photon's promises of better performance and cost savings. We had a mix of jobs running in our workspace - some heavy hitters, some medium-sized, and quite a few smaller ones. I thought, "Why not give Photon a shot and see what it can do for us?" So I took the plunge and enabled it across our jobs to gather real-world data.

What I Found: The Good, The Bad, and The Surprising

The Good News 🎉

Cost Savings Are Real - For our medium and large jobs, we saw significant cost reductions. This was especially true for jobs that were doing a lot of ShuffleHashJoin operations.

Performance Boost - Some of our complex queries that were previously taking hours to run showed noticeable improvements.

If you're interested in understanding how Photon performs so well with certain types of joins, you might want to check out my previous article where I dive deep into SortMergeJoin vs ShuffleHashJoin.

The Bad News 😅

Small Jobs Got More Expensive - Yes, you read that right! Our smaller jobs (using less than 20 CPU hours) actually became slightly more expensive with Photon enabled.

Non-Spark Jobs Don't Benefit - If you're running ML jobs or pure Python jobs that don't use Spark, Photon won't help at all. Photon is specifically designed to optimize Spark SQL and DataFrame operations, so it won't impact the performance of non-Spark workloads.

Not a Magic Bullet - Jobs with heavy spill-to-disk issues didn't see much improvement, and those scanning many small files didn't benefit much either.

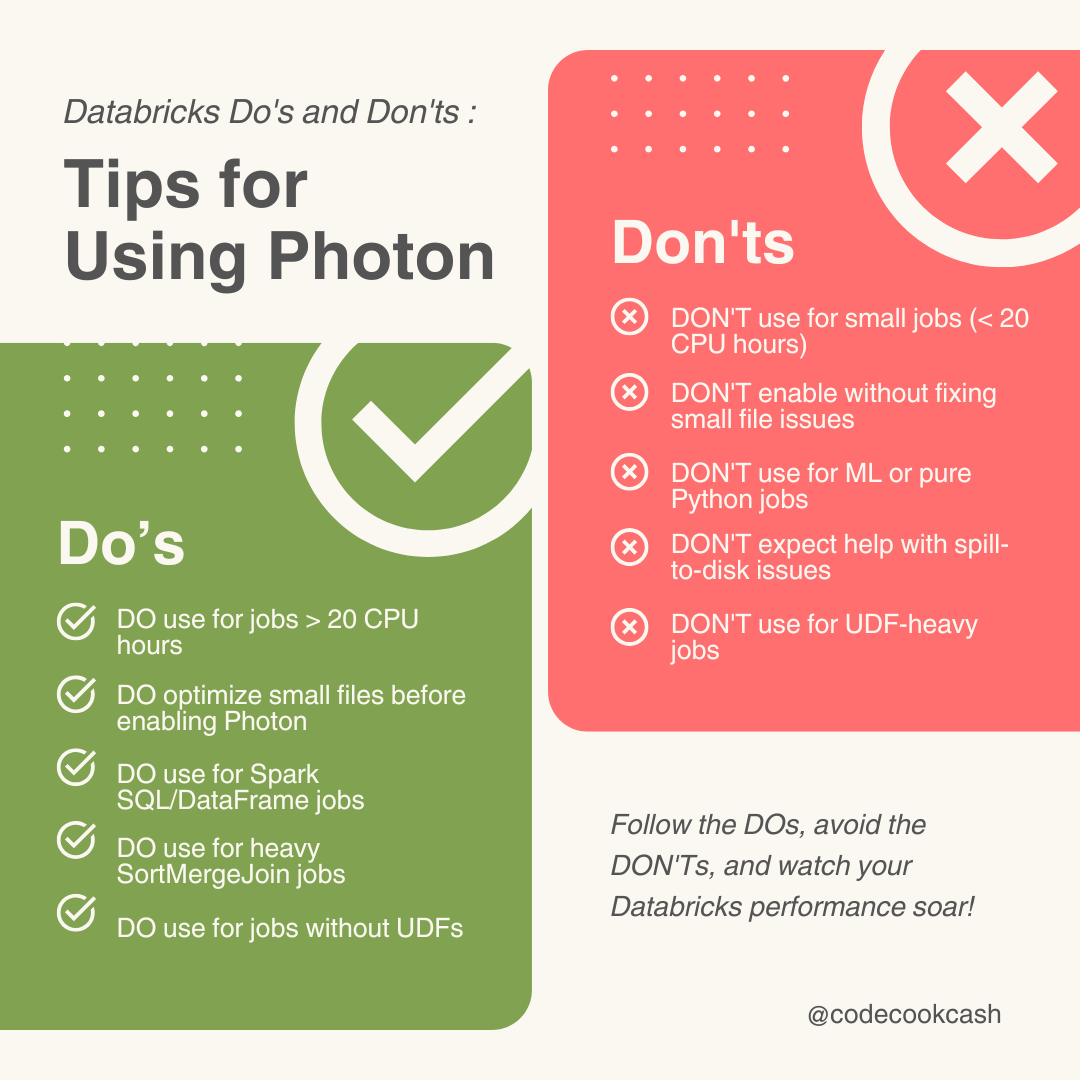

DO ✅ vs DON'T ❌

What I'm Still Figuring Out

One of the biggest challenges I'm working on is how to automatically detect which jobs would benefit from Photon. This is particularly important because many Databricks users aren't engineers and don't have deep knowledge about Spark optimization. We need a way to:

Analyze job patterns and characteristics

Predict potential Photon benefits

Automatically recommend Photon settings

Monitor and validate the improvements

This would help our centralized team apply an intelligent approach to enable Photon only for jobs that would benefit from it, saving costs without requiring any effort or technical knowledge from end users.

I've heard that Databricks Serverless might be a solution to this challenge, as Photon is enabled by default in Serverless compute and runs automatically on SQL warehouses, notebooks, and workflows. It can automatically optimize compute resources and configurations. However, I haven't had the chance to test it yet. If you're using Serverless, I'd love to hear about your experience! 🙏

Final Thoughts

Photon is a powerful feature, but it's not a one-size-fits-all solution. The key is to understand your workload and test thoroughly. For us, it's been particularly beneficial for our medium-sized jobs, but we're still learning and optimizing.

Remember: The best approach is to test Photon with your specific workloads before making any broad changes. What worked for us might not work for you, and vice versa.

Happy optimizing! 🚀

-----------------

🍜 Still here? You must really like this stuff – I appreciate it!

If you enjoyed this post, come grab another tech bite with CodeCookCash:

▶️ YouTube: youtube.com/@codecookcash

📝 Blog: codecookcash.substack.com

👋 Want more behind-the-scenes and tech-life reflections?

Connect with Huong Vuong:

💼 LinkedIn: linkedin.com/in/hoaihuongbk

📘 Facebook: facebook.com/hoaihuongbk

💡 Follow for fun – read for depth – learn at your pace.