Build Your First Python Package with Rust In 4 Hours

Introduction

Want to supercharge your Python code with Rust's performance while keeping Python's simplicity? You're in the right place! Follow along as I share how I built simple yet powerful geoprocessing functions and put them to work in real data engineering tasks.

Why Python + Rust?

The data engineering landscape is evolving rapidly, with powerful Rust-based tools like Polars, DataFusion, and uv leading the way. These tools demonstrate how Rust can unlock exceptional performance while keeping Python's ease of use. Working with uv has shown me concrete benefits: faster package installations, better dependency resolution, and smoother project management.

What We'll Build

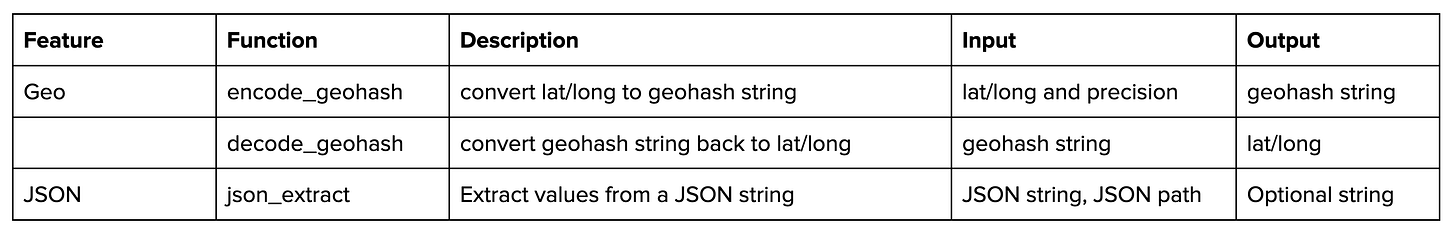

Building on my existing lakeops project, I'll add new high-performance user-defined functions for:

Geohash encoding/decoding functions

JSON extraction utilities

These functions will work seamlessly across both Polars and PySpark environments, demonstrating the power of Rust in real data engineering tasks.
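For readers new to geohashes, here is how they work. A geohash repeatedly halves the longitude and latitude ranges, interleaving one bit per halving (longitude first), and maps each group of 5 bits to a base-32 character, so every extra character shrinks the cell. The sketch below is a pure-Python reference of that standard algorithm, not the lakeops Rust code. The `_ref` function names and the `geohash_error` helper are hypothetical. A reference like this can double as a test oracle for the Rust `encode_geohash`/`decode_geohash`.

```python
# Pure-Python reference sketch of the standard geohash algorithm
# (hypothetical *_ref names; not the lakeops Rust implementation).

BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # geohash alphabet (no a, i, l, o)


def encode_geohash_ref(lat: float, lon: float, precision: int) -> str:
    lat_range = [-90.0, 90.0]
    lon_range = [-180.0, 180.0]
    chars, value, n_bits = [], 0, 0
    use_lon = True  # bits alternate lon, lat, lon, ... starting with longitude
    while len(chars) < precision:
        rng, coord = (lon_range, lon) if use_lon else (lat_range, lat)
        mid = (rng[0] + rng[1]) / 2
        if coord >= mid:
            value = (value << 1) | 1  # upper half -> bit 1
            rng[0] = mid
        else:
            value <<= 1  # lower half -> bit 0
            rng[1] = mid
        use_lon = not use_lon
        n_bits += 1
        if n_bits == 5:  # every 5 bits become one base-32 character
            chars.append(BASE32[value])
            value, n_bits = 0, 0
    return "".join(chars)


def decode_geohash_ref(geohash: str) -> tuple[float, float]:
    lat_range = [-90.0, 90.0]
    lon_range = [-180.0, 180.0]
    use_lon = True
    for ch in geohash:
        value = BASE32.index(ch)
        for shift in range(4, -1, -1):  # most significant bit first
            rng = lon_range if use_lon else lat_range
            mid = (rng[0] + rng[1]) / 2
            if (value >> shift) & 1:
                rng[0] = mid
            else:
                rng[1] = mid
            use_lon = not use_lon
    # The decoded point is the centre of the final cell.
    return (lat_range[0] + lat_range[1]) / 2, (lon_range[0] + lon_range[1]) / 2


def geohash_error(precision: int) -> tuple[float, float]:
    """Worst-case |decoded - original| in degrees for (lat, lon)."""
    total_bits = 5 * precision
    lon_bits = (total_bits + 1) // 2  # longitude gets the extra bit when odd
    lat_bits = total_bits // 2
    return 90.0 / 2**lat_bits, 180.0 / 2**lon_bits  # half of cell height/width


lat, lon = 37.7749, -122.4194  # San Francisco
gh = encode_geohash_ref(lat, lon, 7)
d_lat, d_lon = decode_geohash_ref(gh)
max_lat_err, max_lon_err = geohash_error(7)
print(gh, d_lat, d_lon)
print(abs(d_lat - lat) <= max_lat_err, abs(d_lon - lon) <= max_lon_err)  # True True
```

At precision 7 the worst-case error is about 0.0007 degrees on each axis. That is far tighter than a 1% relative tolerance, which allows roughly 0.38 degrees of latitude at San Francisco. A test can therefore use `geohash_error` as its bound instead.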

My Approach

While many excellent articles explore Rust-Python integration (like this comprehensive guide), I'm taking a focused, time-boxed approach. In just 4 hours, I'll show you how to go from setup to publishing a working package.

Hour 1: Setup & Project Structure

The maturin project layout guide is an excellent resource that shows best practices for organizing mixed Python + Rust projects. It covers everything from basic to advanced layouts, helping you understand how to structure your code effectively.

For my lakeops project, I followed the "src-layout" pattern, which keeps the code organized and maintainable:

Main Python code lives in src/

New Rust implementations go in rust/

Clear separation between Python and Rust components

lakeops/
├── src/                  # Python code
│   └── lakeops/
│       ├── __init__.py
│       └── udf/
├── rust/                 # Rust code
│   ├── src/
│   │   ├── lib.rs
│   │   └── udf/
│   └── Cargo.toml
└── pyproject.toml        # Python package metadata

I updated the pyproject.toml to use maturin as the build backend:

[build-system]
requires = ["maturin>=1.0,<2.0", "hatchling"]
build-backend = "maturin"

I chose maturin as the build backend for lakeops because it makes Python-Rust integration far simpler than it was a few years ago. I'll cover maturin's GitHub Actions support in detail in Hour 4, when we set up CI/CD.

Let's move on to implementing our first Rust functions!

Hour 2: Writing the Rust Code

Yes! As a Rust beginner writing my first Rust code, I leveraged Cody (Sourcegraph's AI assistant) to accelerate development. This helped me focus on implementing the functions while learning Rust syntax and best practices along the way.

Time to write our Rust functions! I'll implement two types of functions in the lakeops project: geospatial functions and JSON functions.

Let's start with the Rust code structure in rust/src:

rust/
├── src/
│   ├── lib.rs            # Python module registration with PyO3
│   ├── geo/              # Geospatial module
│   │   ├── mod.rs
│   │   └── functions.rs
│   └── json/             # JSON module
│       ├── mod.rs
│       └── functions.rs
└── Cargo.toml

This modular approach keeps our code organized:

Each module (geo, json) contains its own functionality

functions.rs holds the core implementations

mod.rs handles module exports

lib.rs wraps everything into a Python module using PyO3 (read more about PyO3, the Rust bindings for Python, here)
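The post doesn't show what goes inside `geo/functions.rs`. To give a sense of the kind of logic that lives there, here is a pure-Python sketch of the classic ray-casting (even-odd) test, a common way to implement a function like `point_in_polygon`. The name `point_in_polygon_ref`, the `(x, y)` tuple inputs, and the choice of algorithm are all assumptions. The lakeops Rust version may accept different types or use another method. The sketch treats coordinates as planar, which is a reasonable approximation for small polygons in lon/lat.

```python
# Hypothetical pure-Python sketch of a ray-casting point-in-polygon test.
# point = (x, y); polygon = list of (x, y) vertices, implicitly closed.

Point = tuple[float, float]


def point_in_polygon_ref(point: Point, polygon: list[Point]) -> bool:
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the ring
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing to the right flips parity
    return inside


square = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
print(point_in_polygon_ref((2.0, 2.0), square))  # True
print(point_in_polygon_ref((5.0, 2.0), square))  # False
```

Horizontal edges are skipped by the straddle check, so the division never sees `y2 == y1`.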

Here's how I integrated the Rust modules into the lakeops Python package structure: the lakeops/udf/__init__.py imports and exposes the Rust functions, making them available through lakeops.udf.

from lakeops._lakeops_udf import (
    point_in_polygon,
    calculate_distance,
    json_extract,
    is_valid_json,
    encode_geohash,
    decode_geohash,
)

__all__ = [
    'point_in_polygon',
    'calculate_distance',
    'json_extract',
    'is_valid_json',
    'encode_geohash',
    'decode_geohash',
]

Hour 3: Building & Testing

Here's my testing and build process:

Configured maturin settings to package both Rust and Python code:

[tool.maturin]
features = ["pyo3/extension-module"]
module-name = "lakeops._lakeops_udf"
python-source = "src"
manifest-path = "rust/Cargo.toml"

Updated Makefile to trigger maturin build:

build:
	@echo "Building the project"
	@uv tool run maturin develop

Testing geohash function:

from lakeops.udf import decode_geohash, encode_geohash


def test_geohash():
    lat, lon = 37.7749, -122.4194  # San Francisco
    geohash = encode_geohash(lat, lon, 7)  # avoid shadowing the built-in hash()
    assert len(geohash) == 7
    decoded_lat, decoded_lon = decode_geohash(geohash)
    # Test relative difference (abs() keeps the check valid for negative coordinates)
    assert abs(decoded_lat - lat) / abs(lat) < 0.01  # 1% tolerance
    assert abs(decoded_lon - lon) / abs(lon) < 0.01  # 1% tolerance

Testing JSON functions with PySpark:

def test_json_functions(spark, test_data):
    result = test_data.selectExpr(
        "json_extract(metadata, '$.name') as store_name",
        "json_extract(metadata, '$.details.category') as category",
        "is_valid_json(metadata) as valid_json",
    ).collect()
    assert result[0].store_name == '"Store A"'
    assert result[0].category == '"retail"'
    assert result[0].valid_json is True
    assert result[1].store_name == '"Store B"'
    assert result[1].category == '"wholesale"'
    assert result[1].valid_json is True


def test_sql_query(spark, test_data):
    test_data.createOrReplaceTempView("stores")
    result = spark.sql("""
        SELECT
            point_in_polygon(point, polygon) as is_inside,
            json_extract(metadata, '$.details.id') as store_id
        FROM stores
        WHERE point_in_polygon(point, polygon) = true
    """).collect()
    assert len(result) == 1
    assert result[0].store_id == "123"

These tests verify our Rust functions work correctly in both Polars and PySpark environments!
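The JSON assertions also pin down a behaviour worth noting: `json_extract` returns the matched value as JSON text, so a string comes back quoted (`'"Store A"'`). The sketch below is a hypothetical pure-Python reference that reproduces that contract for simple `$.key.key` paths. It is useful for cross-checking the Rust functions without a Spark session. The `_ref` names and the sample `metadata` document are invented for illustration, since the real test fixture isn't shown, and `id` is assumed to be a JSON number.

```python
import json
from typing import Optional


def is_valid_json_ref(text: Optional[str]) -> bool:
    try:
        json.loads(text)
    except (TypeError, ValueError):  # None/non-string input, or malformed JSON
        return False
    return True


def json_extract_ref(text: Optional[str], path: str) -> Optional[str]:
    """Follow a simple '$.a.b' path; return the match re-serialized as JSON text."""
    if not path.startswith("$"):
        raise ValueError(f"unsupported path: {path!r}")
    if not is_valid_json_ref(text):
        return None
    value = json.loads(text)
    for key in filter(None, path[1:].split(".")):  # "$.details.id" -> details, id
        if not isinstance(value, dict) or key not in value:
            return None  # this sketch maps a missing key to None (SQL NULL)
        value = value[key]
    return json.dumps(value)


# Hypothetical row mirroring the values the PySpark tests expect
metadata = '{"name": "Store A", "details": {"category": "retail", "id": 123}}'
print(json_extract_ref(metadata, "$.name"))              # "Store A"
print(json_extract_ref(metadata, "$.details.category"))  # "retail"
print(json_extract_ref(metadata, "$.details.id"))        # 123
print(is_valid_json_ref("{not json"))                    # False
```

This contract differs from Spark's built-in `get_json_object`, which returns string values without the surrounding quotes. Keep that difference in mind when comparing results from the two.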

Hour 4: CI/CD & Publishing

This step was crucial and challenging! Adding Rust code to the Python project means the package now ships compiled code, which must be built separately for each operating system and CPU architecture (ARM and x86_64, also known as AMD64). I needed to update the lakeops CI to support multi-architecture builds and deployments to GitHub/PyPI.

Since I come from a GitLab background, this was my first deep dive into GitHub Actions. The learning curve was steep, but the results were worth it! The CI now builds wheels for:

Linux (x86_64, aarch64)

macOS (x86_64, aarch64)

Windows (x86_64)

Let's look at how I configured the GitHub Actions workflow to handle these cross-platform builds!

Matrix Strategy for Cross-Platform Builds

I learned that using a matrix in GitHub Actions provides an efficient way to manage multiple builds through templating:

jobs:
  build:
    runs-on: ${{ matrix.os }}
    strategy:
      matrix:
        os: [ubuntu-latest, macos-latest, windows-latest]
        architecture: [x86_64, aarch64]  # referenced as matrix.architecture in later steps
        python-version: ["3.10"]
        exclude:
          - os: windows-latest
            architecture: aarch64

This matrix configuration:

Builds across different operating systems

Handles multiple CPU architectures

Supports specific Python versions

Excludes unsupported combinations

The matrix approach lets us generate all necessary wheels in parallel, making our CI pipeline more efficient.
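GitHub computes the job list as the cartesian product of the matrix axes. It then drops every combination that matches an `exclude` entry, and a partial match is enough. A small Python sketch of that expansion shows why this matrix yields exactly the five wheel targets listed earlier. The axis names are shortened here to `os`, `arch`, and `python`. The sketch mimics the documented behaviour; it is not GitHub's implementation.

```python
from itertools import product

# The three axes of the build matrix (key names shortened for this sketch).
axes = {
    "os": ["ubuntu-latest", "macos-latest", "windows-latest"],
    "arch": ["x86_64", "aarch64"],
    "python": ["3.10"],
}
# An exclude entry removes every combination that matches all of its keys.
excludes = [{"os": "windows-latest", "arch": "aarch64"}]


def expand(axes: dict[str, list[str]], excludes: list[dict[str, str]]) -> list[dict[str, str]]:
    keys = list(axes)
    jobs = []
    for values in product(*(axes[k] for k in keys)):
        job = dict(zip(keys, values))
        if any(all(job[k] == v for k, v in ex.items()) for ex in excludes):
            continue  # matched an exclude entry
        jobs.append(job)
    return jobs


jobs = expand(axes, excludes)
for job in jobs:
    print(job["os"], job["arch"], job["python"])
print(len(jobs))  # 5 = 3 * 2 * 1 - 1 excluded
```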

Building Wheels with maturin-action

For each matrix combination, I use PyO3/maturin-action to build the wheels:

- name: Build wheel
  uses: PyO3/maturin-action@v1
  with:
    command: build
    target: ${{ matrix.architecture }}
    args: --release --out dist -i python${{ matrix.python-version }}

This action configuration:

Uses the explicit build command

Targets specific architectures from the matrix

Builds release wheels into the dist directory

Specifies Python version for compatibility

When running GitHub Actions, each OS runner has a default CPU architecture:

macos-latest → ARM

windows-latest → x86_64

ubuntu-latest → x86_64

This created a challenge for building ARM wheels on ubuntu-latest, since the host is x86_64. The solution is to use Docker-based builds with maturin-action, with QEMU set up on the Linux runners:

- name: Setup QEMU
  if: matrix.os == 'ubuntu-latest'
  uses: docker/setup-qemu-action@v2

- name: Build wheel
  uses: PyO3/maturin-action@v1

For more details on this challenge and solutions, check out the official maturin-action documentation.
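Applying the runner defaults listed above to the five matrix jobs shows which builds are not native. The sketch below hard-codes that list, which is only a snapshot because GitHub can change what a `-latest` label points to. It flags every job whose target CPU differs from the runner's. Besides the Linux aarch64 job handled with QEMU, it surfaces a second non-native job. On an ARM `macos-latest` runner, the x86_64 wheel is the one built for a foreign architecture, which Apple's toolchain can typically cross-compile without emulation. The function and constant names are hypothetical.

```python
# Default CPU of each runner label, per the list above (snapshot; labels can move).
RUNNER_ARCH = {
    "ubuntu-latest": "x86_64",
    "macos-latest": "aarch64",  # Apple silicon ("ARM" above)
    "windows-latest": "x86_64",
}

JOBS = [
    ("ubuntu-latest", "x86_64"),
    ("ubuntu-latest", "aarch64"),
    ("macos-latest", "x86_64"),
    ("macos-latest", "aarch64"),
    ("windows-latest", "x86_64"),
]


def needs_cross_build(runner: str, target_arch: str) -> bool:
    """True when the wheel targets a different CPU than the runner's own."""
    return RUNNER_ARCH[runner] != target_arch


for runner, arch in JOBS:
    mode = "cross-compile/emulate" if needs_cross_build(runner, arch) else "native"
    print(f"{runner:<15} {arch:<8} {mode}")
```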

Let's look at how we handle the artifacts after building!

Handling Build Artifacts

After successful wheel builds, I upload the artifacts:

- name: Upload wheel
  uses: actions/upload-artifact@v4
  with:
    name: wheels-${{ matrix.os }}-${{ matrix.architecture }}-${{ matrix.python-version }}
    path: dist/*.whl

This step:

Names artifacts uniquely based on matrix values

Uploads all wheel files from the dist directory

Makes wheels available for the publishing workflow
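Unique names are more than bookkeeping. With `actions/upload-artifact@v4`, uploading twice to the same artifact name within one workflow run fails by default. The sketch below renders the name template for each of the five jobs and checks for collisions. It also shows what happens if one segment of the template renders empty, as a `${{ matrix.* }}` expression does when it references a key the matrix doesn't define. The helper names are hypothetical.

```python
from collections import Counter

JOBS = [
    ("ubuntu-latest", "x86_64"),
    ("ubuntu-latest", "aarch64"),
    ("macos-latest", "x86_64"),
    ("macos-latest", "aarch64"),
    ("windows-latest", "x86_64"),
]
PYTHON = "3.10"


def artifact_name(os_label: str, arch: str, python: str) -> str:
    # Mirrors wheels-${{ matrix.os }}-${{ matrix.architecture }}-${{ matrix.python-version }}
    return f"wheels-{os_label}-{arch}-{python}"


def find_collisions(names: list[str]) -> list[str]:
    return [name for name, count in Counter(names).items() if count > 1]


names = [artifact_name(os_label, arch, PYTHON) for os_label, arch in JOBS]
print(find_collisions(names))  # []

# If the architecture segment renders empty, the Linux and macOS jobs collide.
broken = [artifact_name(os_label, "", PYTHON) for os_label, _ in JOBS]
print(find_collisions(broken))  # ['wheels-ubuntu-latest--3.10', 'wheels-macos-latest--3.10']
```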

The unique naming helps track which wheels came from which build combination. Let's look at how I publish these wheels to PyPI!

Publishing to PyPI

I split the publishing workflow into two stages for better control:

Stage 1: Build and Upload Artifacts

Stage 2: Release to PyPI

The release stage efficiently collects and publishes all our wheels:

- uses: actions/download-artifact@v4
  with:
    path: dist
    merge-multiple: true

- name: Publish to PyPI
  uses: pypa/gh-action-pypi-publish@release/v1
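With `merge-multiple: true`, every downloaded artifact is extracted into the same `dist` directory, so two files with the same name would overwrite each other. That doesn't happen here because wheel filenames embed compatibility tags: `{distribution}-{version}-{python tag}-{abi tag}-{platform tag}.whl`, with an optional build tag. The sketch below parses those tags and checks that the merged file list has no duplicates. The filenames are illustrative examples of what maturin might produce for lakeops 0.1.7, not copied from the actual release.

```python
def wheel_tags(filename: str) -> tuple[str, str, str]:
    """Return (python, abi, platform) tags from a wheel filename."""
    stem = filename.removesuffix(".whl")
    # The last three dash-separated fields are always the compatibility tags.
    python_tag, abi_tag, platform_tag = stem.split("-")[-3:]
    return python_tag, abi_tag, platform_tag


# Illustrative artifact contents, one wheel per matrix job (example filenames).
artifacts = {
    "wheels-ubuntu-latest-x86_64-3.10": ["lakeops-0.1.7-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl"],
    "wheels-ubuntu-latest-aarch64-3.10": ["lakeops-0.1.7-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl"],
    "wheels-macos-latest-x86_64-3.10": ["lakeops-0.1.7-cp310-cp310-macosx_10_12_x86_64.whl"],
    "wheels-macos-latest-aarch64-3.10": ["lakeops-0.1.7-cp310-cp310-macosx_11_0_arm64.whl"],
    "wheels-windows-latest-x86_64-3.10": ["lakeops-0.1.7-cp310-cp310-win_amd64.whl"],
}

# merge-multiple: true flattens every artifact into one directory.
merged = [wheel for wheels in artifacts.values() for wheel in wheels]
assert len(merged) == len(set(merged)), "two jobs produced the same filename"

for wheel in merged:
    print(wheel_tags(wheel))
```

All five wheels carry the `cp310` tag because the matrix only builds for Python 3.10, so pip on other Python versions won't select them. For wider coverage, add versions to the matrix or consider PyO3's `abi3` feature. That feature builds one wheel per platform that works from a minimum Python version onward.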

Yooo!!! Now users can install the lakeops package with prebuilt wheels for every supported platform!

Conclusion

Building this Python package with Rust was an enriching journey that taught me valuable lessons about:

Writing and debugging Rust code

Leveraging LLMs for development assistance

Working with maturin for Python-Rust integration

Mastering GitHub Actions for multi-architecture builds

Publishing platform-specific packages

The time invested went beyond 4 hours, but the knowledge gained in cross-language development, CI/CD practices, and modern development tools made it incredibly worthwhile.

Want to explore deeper? Check out the code at github.com/hoaihuongbk/lakeops.

Try the new functions yourself:

pip install lakeops==0.1.7

I'd love to hear your feedback and experiences! Feel free to open issues or discussions in the GitHub repository.